Demystifying the Performance Interference of Co-located Virtual Network Functions

Abstract— Network function virtualization (NFV) decouples network functions from dedicated hardware and enables them to run on commodity servers, facilitating widespread deployment of virtualized network functions (VNFs). Network operators tend to deploy VNFs in virtual machines (VMs) because VMs are easy to duplicate and migrate, which enables flexible VNF placement and scheduling. Considerable effort has been devoted to efficient VNF placement approaches that aim to minimize the resource cost of VNF deployment and reduce the latency of service chains. However, existing placement approaches may cause co-located VNFs to compete for hardware resources, leading to performance degradation. In this paper, we present a measurement study of the performance interference among different types of co-located VNFs and analyze how the hardware resources that VNFs compete for and the characteristics of packets affect this interference. We show that performance interference between co-located VNFs is ubiquitous, degrading VNF throughput by 12.36% to 50.3%, and that competition for network I/O bandwidth plays a key role in the interference. Based on our measurement results, we give some advice on how to design more efficient VNF placement approaches.

I. INTRODUCTION

As a flexible and economical technology for network function deployment, network function virtualization (NFV) is drawing increasing attention. It decouples network functions (NFs) from dedicated hardware and enables them to run on commodity servers, showing great potential for reducing the capital expenditure of NF deployment and giving network operators the ability to scale on demand [1], [2].

Leveraging the virtualization technology, virtual network functions (VNFs) run in virtual machines (VMs) [3]. Unlike traditional middleboxes with fixed locations [4], VNFs could be deployed on any commodity server in the network by migrating VMs. However, this usage model raises new challenges. Firstly, a packet may need to go through a set of NFs. Network operators should carefully decide where these VNF instances should be placed among lots of candidate servers, to reduce end-to-end latency [5]. Secondly, to improve the resource utilization, VNF placement should keep pace with flow fluctuations by dynamically scaling the number of VNF instances. Finally, existing VNF instances may need to be consolidated to another server to free and shut down some servers for saving energy. Existing studies are devoted to tackling the above challenges and offering efficient VNF placement approaches. For instance, Li et al. design a real-time system, NFV-RT, to dynamically provision resources to meet traffic demand [6]. Eramo et al. propose a migration policy to tell network operators when and where they should migrate VNFs for saving energy [7].

However, none of the existing VNF placement approaches takes the performance interference among co-located VNFs into account. Although virtualization provides a level of performance isolation among VMs, there is still significant performance interference among VNFs running on shared hardware infrastructure [9]. As evidenced by our measurement in Sec. II-B, the performance of a flow monitor, measured as throughput in our work, decreases by 38.47% when the flow monitor runs simultaneously with an intrusion detection system. Performance interference among VNFs stems from the inherent sharing of resources across VMs. A VNF has to compete with other co-located VNFs for resources, including network I/O bandwidth, CPU, memory, etc. [10]. The more intense the resource competition, the more severe the performance interference [11]. However, to maximize resource utilization and reduce power consumption, network operators tend to place as many VNF instances as they can on the same physical servers, resulting in fierce resource competition and severe performance interference [12]. An in-depth understanding of performance interference among co-located VNFs is therefore important for efficient VNF management.

To address the issues above, in this paper, we classify VNFs into different types according to their packet operations, as summarized in Table II, and measure the performance interference among different types of co-located VNFs. First, we verify the existence and severity of the performance interference by measuring the performance of each VNF under different VNF combinations. Next, we analyze the resource usage of each VNF when VNFs run in pairs to explore the relation between resources and performance interference. Finally, we vary packet characteristics, e.g., packet size and transmission rate, to evaluate their impact on performance interference.

II. BACKGROUND AND MOTIVATION

In this section, we prove the existence of performance interference among VNFs from a theoretical perspective and an experimental perspective, respectively.

A. Background

Ideally, a VM’s performance would be independent of other activity on the same hardware, which would be accomplished by smart scheduling and resource allocation policies. However, such performance isolation is hard to realize in the real world. For example, hyper-threading lets one physical core present two logical cores, which means that the vCPUs allocated to different VMs may share the same physical core and caches. When setting up a VM, we can specify the number of vCPUs and allocate fixed amounts of memory and disk space; however, it is technically difficult to partition network I/O bandwidth, memory bandwidth, and disk bandwidth among VMs [13]. This inherent sharing of resources across VMs may cause performance interference [14]. Furthermore, the effects of resource competition may interact, which means that competition for one type of resource may cause competition for other resources [15].
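The point about vCPUs sharing a physical core can be checked on a Linux host. The following is a minimal sketch, assuming a Linux hypervisor host that exposes CPU topology through sysfs and assuming the vCPUs of interest are pinned to known logical CPUs; the function names are hypothetical and are not part of the measurement setup.

```python
from pathlib import Path


def parse_cpu_list(text: str) -> set[int]:
    """Parse a Linux CPU list such as "0,8" or "0-3,8-11" into a set of CPU IDs."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def share_physical_core(cpu_a: int, cpu_b: int) -> bool:
    """Return True if two logical CPUs are hyper-thread siblings (Linux only)."""
    path = Path(f"/sys/devices/system/cpu/cpu{cpu_a}/topology/thread_siblings_list")
    return cpu_b in parse_cpu_list(path.read_text())
```

If two VMs have vCPUs pinned to logical CPUs for which `share_physical_core` returns `True`, they contend for the same core and its private caches, which is one source of the interference discussed here.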

As mentioned above, VNFs generally run in VMs and may be co-located on the same server. Performance interference among VNFs is therefore inevitable and depends on the activities and characteristics of the VNFs. For example, frequent network I/O operations may cause competition for network I/O bandwidth, while packet header inspection and packet rewriting make VNFs sensitive to CPU and cache. However, how severe is the performance interference among VNFs? Which factor plays the key role in VNF performance? And how can performance interference be avoided during VNF placement? The answers remain unknown.

B. Motivation

To reveal the severity of performance interference, we conduct a preliminary experiment with Snort[1] and Pktstat[2], which represent two of the VNF types listed in Table II. We deploy Snort and Pktstat on two co-located VMs to perform filtering and listening functions, respectively. We use another server to generate and send traffic to each VM. Table I shows the performance of Snort and Pktstat running separately and simultaneously. Compared with running separately, the processing capacities of Snort and Pktstat decrease by 35.51% and 38.47%, respectively, when they run simultaneously. These results confirm that interference across co-located VNFs can severely reduce VNF performance.

TABLE I

PERFORMANCE COMPARISON BETWEEN SNORT AND PKTSTAT RUNNING SEPARATELY AND SIMULTANEOUSLY.

| VNF | Running Separately | Running Simultaneously |
| --- | --- | --- |
| Snort | 955 Mbps | 615.88 Mbps |
| Pktstat | 169 Mbps | 103.99 Mbps |
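The degradation percentages quoted for this experiment follow directly from the throughput values in Table I. A minimal sketch of the arithmetic (the function name is ours, introduced only for illustration):

```python
def degradation_pct(separate_mbps: float, colocated_mbps: float) -> float:
    """Relative throughput loss, in percent, from isolated to co-located runs."""
    return (separate_mbps - colocated_mbps) / separate_mbps * 100.0


# Throughput values taken from Table I (Mbps): (separately, simultaneously).
table_i = {"Snort": (955.0, 615.88), "Pktstat": (169.0, 103.99)}

for name, (separate, colocated) in table_i.items():
    print(f"{name}: {degradation_pct(separate, colocated):.2f}% lower")
# prints:
# Snort: 35.51% lower
# Pktstat: 38.47% lower
```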

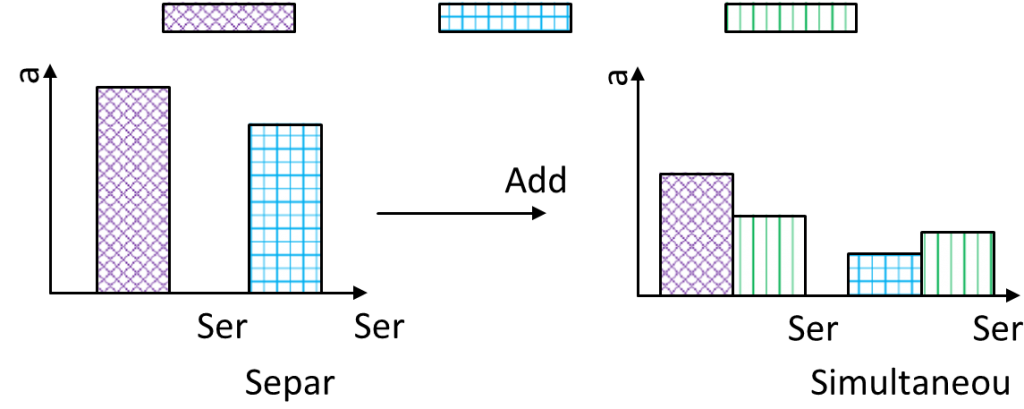

We now discuss the effect of performance interference among co-located VNFs and why it should be taken into account when placing VNF instances. As illustrated in Fig. 1, there are two different types of VNFs, VNF 1 and VNF 2, each of which handles different traffic. VNF 1 and VNF 2 are placed on server 1 and server 2, respectively.

[Figure 1 labels: panels “Separately” and “Simultaneously” (after “Add VNF 3”); each panel shows the capacity on Server 1 and Server 2; legend: VNF 1, VNF 2, VNF 3.]

Fig. 1. A motivating example of co-located VNFs’ performance interference.

Now suppose that rising network traffic calls for an additional VNF 3. Network operators have two choices: deploying VNF 3 on server 1 or on server 2, marked as plan 1 and plan 2, respectively. As shown in Fig. 1, both choices cause significant performance degradation: the processing capacities of VNF 1 and VNF 2 when co-located with VNF 3 are lower than their original processing capacities. Notably, the performance degradation of VNF 2 is higher than that of VNF 1, and VNF 3’s processing capacity in plan 1 is higher than that in plan 2. If network operators overlook the performance interference and select plan 2, the overall processing capacity will be lower than in plan 1, resulting in greater profit loss. Moreover, in either plan, the co-located VNFs suffer a sudden and severe performance degradation because of the interference. Thus, the added VNF 3 may not meet the requirements of the rising traffic, and the traffic processed by the original VNF may suffer from longer latency, leading to unreliability. Existing VNF placement approaches, which do not take performance interference into account, cannot foresee this situation and therefore cannot respond in time. If the performance interference is taken into account, the degradation can be mitigated by deploying VNF 3 on server 1, and the placement approach can provision sufficient VNF instances to reduce latency. In short, it is essential to account for the performance interference among VNFs when adjusting the number of VNF instances to follow traffic fluctuations.
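The comparison between plan 1 and plan 2 can be sketched as a small calculation. All capacities and interference factors below are hypothetical values chosen only to mirror the qualitative relationships described above; they are not taken from Fig. 1. Multiplying pairwise factors when more than two VNFs share a server is likewise an assumption of this sketch.

```python
# Hypothetical stand-alone capacities in Mbps (not from the paper).
SOLO = {"VNF1": 900.0, "VNF2": 800.0, "VNF3": 700.0}

# Hypothetical normalized throughput of a target VNF given one competitor,
# keyed by (target, competitor).
NORMALIZED = {
    ("VNF1", "VNF3"): 0.85, ("VNF3", "VNF1"): 0.80,
    ("VNF2", "VNF3"): 0.60, ("VNF3", "VNF2"): 0.65,
}


def total_capacity(placement: dict[str, list[str]]) -> float:
    """Sum the capacity of all VNFs, scaling each by its co-location penalty."""
    total = 0.0
    for vnfs in placement.values():
        for target in vnfs:
            factor = 1.0
            for other in vnfs:
                if other != target:
                    factor *= NORMALIZED[(target, other)]
            total += SOLO[target] * factor
    return total


plan_1 = {"server1": ["VNF1", "VNF3"], "server2": ["VNF2"]}
plan_2 = {"server1": ["VNF1"], "server2": ["VNF2", "VNF3"]}

# An interference-aware placement picks the plan with the higher total capacity.
best = max((plan_1, plan_2), key=total_capacity)
```

With these illustrative numbers, plan 1 retains more aggregate capacity than plan 2, which is the kind of difference an interference-unaware placement approach cannot see.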

III. MEASUREMENT SETUP

A. Measurement Platform

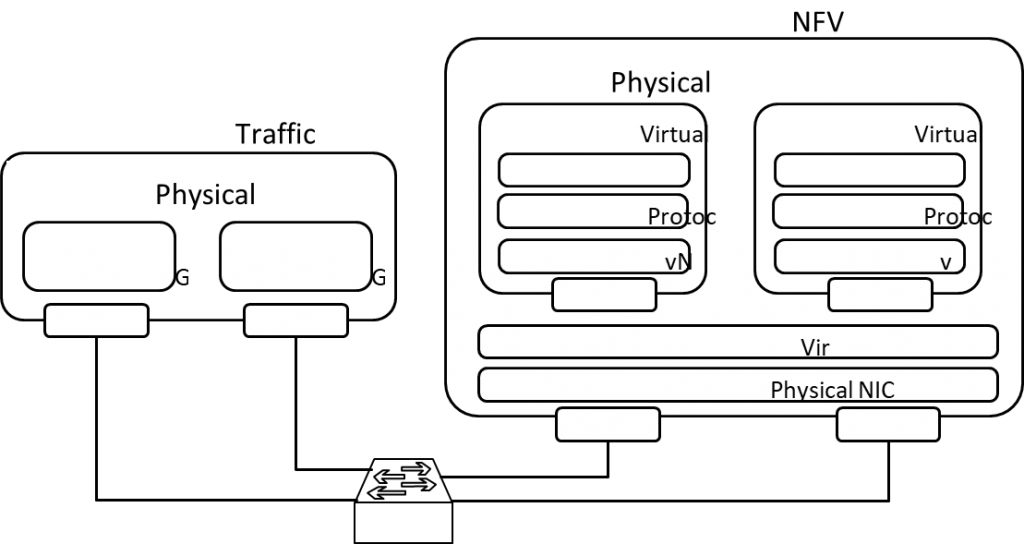

As shown in Fig. 2, we conduct the measurement on two commodity servers. One server is virtualized and acts as the NFV server; the other acts as the traffic server, sending packets to the NFV server. Each server is configured with an eight-core Intel Xeon E5-2620 v4 2.1 GHz CPU, 64 GB of memory, and two Intel I350 GbE network interface cards (NICs).

[Figure 2 labels: Traffic Server, NFV Server, Physical Machine, Traffic Generator, NIC, Physical NIC Driver, Virtual Switch, Virtual Machine, vNIC, vNIC Driver, Protocol Stack, VNF 1, VNF 2.]

Fig. 2. The measurement platform with two servers. The left server acts as the traffic sender and the right one is the NFV server where the VNFs run.

In the NFV server, each VNF instance runs in its own VM, and each VM is equipped with 1 vCPU core, 2 GB of memory, and a 10 GB disk. We use the traffic server to generate and send packets with varied packet sizes and transmission rates. The NFV server receives the packets and forwards them to the corresponding VNFs. Throughput, response time, and packet loss are measured as performance metrics with packETH[3], siege[4], and iperf[5]. Throughput and latency are two important performance metrics in networking, and the packet loss rate is considered because it reveals wasted resources [16]. In addition, to analyze the relationship between resources and performance, we record CPU utilization, memory usage, and cache usage using dstat[5], the ps command, and perf[6]. Each measurement is carried out 10 times independently, and we report the average values as the measurement results.

B. VNF Setup

From the perspective of packet processing, VNFs can be divided into six types, as shown in Table II. VNFs that only read packets account for more than 75% of VNFs in data centers, while those that modify packets account for less than 25% [17]. Among the latter, Type VI is far less common than Type IV and Type V, accounting for less than 5%. We therefore choose the first five types as our measurement objects.

For each type of VNF, we choose one common application as a representative, as listed in Table II. The selected applications and their setups are described below.

- Iptables[7]. Iptables is an administration tool for IPv4 packet filtering and network address translation. In our measurement, Iptables is equipped with an IP set containing hundreds of IP addresses. It checks the IP address of each incoming packet against the IP set and forwards the packet to the destination server.

- Pktstat. Pktstat is a flow monitor that displays a real-time summary of packet activity on an interface. By reading a packet header, Pktstat obtains the IP addresses, ports, packet type, and size, and uses this information to update the status of each connection.

- Snort. Snort is a network intrusion prevention system with real-time traffic analysis. Snort checks the header and content of each incoming packet, monitoring network transmission in real time. In our measurement, we configure Snort with thousands of detection rules. When it detects illegal packets, Snort discards them, sends alerts to the system, and logs the anomalous actions. Such discarding operations consume Snort’s processing capacity; however, packets that are processed and then discarded cannot be measured by our measurement tools. To ensure the accuracy of measurement, Snort in our measurement only sends alerts and writes logs, with packet discarding disabled.

- Nginx[8]. Nginx is a proxy server supporting HTTP, HTTPS, TCP, UDP, etc. In our measurement, Nginx acts as a load balancer. When a packet arrives, Nginx modifies the packet header and forwards the packet to another server. It can also be configured to modify the packet ports or leave them unchanged. Hence, Nginx can be classified as both Type IV and Type V, as shown in Table II. As Type IV, Nginx only modifies the IP address of incoming packets before forwarding, while as Type V it also modifies the packet ports.

TABLE II

TYPES OF VIRTUAL NETWORK FUNCTIONS AND APPLICATIONS.

| Type | VNF Examples | Ratio | Packet Processing: IP | Packet Processing: Port | Packet Processing: Payload | Application |
| --- | --- | --- | --- | --- | --- | --- |
| I | Gateway | >75% (Types I–III combined) | R | – | – | Iptables |
| II | Firewall, Monitor | | R | R | – | Pktstat |
| III | NIDS, DPI, Caching | | R | R | R | Snort |
| IV | Load Balancer, Proxy | <25% (Types IV–VI combined) | R/W | – | – | Nginx |
| V | NAT | | R/W | R/W | – | Nginx |
| VI | VPN, Compression, Encryption | | R/W | R/W | R/W | – |
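The classification in Table II amounts to a lookup from the access pattern on the IP, port, and payload fields to a VNF type. The sketch below transcribes that table; the `Access` enum and `classify` function are hypothetical names introduced for illustration, and the reading of "R" as read-only and "R/W" as read and write is our interpretation of the table.

```python
from enum import Enum


class Access(Enum):
    NONE = "-"
    READ = "R"
    READ_WRITE = "R/W"


# (IP, port, payload) access pattern -> type label, transcribed from Table II.
VNF_TYPES = {
    (Access.READ, Access.NONE, Access.NONE): "I",
    (Access.READ, Access.READ, Access.NONE): "II",
    (Access.READ, Access.READ, Access.READ): "III",
    (Access.READ_WRITE, Access.NONE, Access.NONE): "IV",
    (Access.READ_WRITE, Access.READ_WRITE, Access.NONE): "V",
    (Access.READ_WRITE, Access.READ_WRITE, Access.READ_WRITE): "VI",
}

# Representative application per measured type (Type VI is not measured).
APPLICATIONS = {"I": "Iptables", "II": "Pktstat", "III": "Snort",
                "IV": "Nginx", "V": "Nginx"}


def classify(ip: Access, port: Access, payload: Access) -> str:
    """Return the Table II type for a given packet-processing pattern."""
    return VNF_TYPES[(ip, port, payload)]
```

For example, a function that reads the IP address, ports, and payload, such as Snort, maps to Type III, while one that rewrites only the IP address maps to Type IV.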

Note that the performance of VNFs also depends on the characteristics of packets. For example, Snort compares the IP address, ports, and content of incoming packets with predefined rules, and the cost of this table look-up operation varies from packet to packet. Therefore, all packets are generated with random addresses and ports, which approximately conforms to reality.
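Randomizing addresses and ports can be illustrated as follows. This is a sketch only: it assumes uniform random draws over the IPv4 address space and the port range, which the text does not specify, and it is not how packETH itself is configured. The function name is hypothetical.

```python
import ipaddress
import random


def random_flow(rng: random.Random) -> tuple[str, int, str, int]:
    """Draw a random (source IP, source port, destination IP, destination port)."""
    src = ipaddress.IPv4Address(rng.getrandbits(32))
    dst = ipaddress.IPv4Address(rng.getrandbits(32))
    return str(src), rng.randint(1, 65535), str(dst), rng.randint(1, 65535)


# A seeded generator makes a traffic trace reproducible across repeated runs.
rng = random.Random(42)
flows = [random_flow(rng) for _ in range(5)]
```

Seeding the generator is a design choice of this sketch: it lets the same header sequence be replayed across the repeated runs of a measurement.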

IV. MEASUREMENT RESULTS

In this section, we measure the performance interference of VNFs and identify the key factors in impacting the performance of VNF instances. We first investigate the impact of different VNF combinations on performance interference.

Then, we collect and analyze each VM’s resource utilization

| 0

0 |

| 0.2

0.2 |

| 0.4

0.4 |

| 0.6

0.6 |

| 0.8

0.8 |

| 1

1 |

| 1.2

1.2 |

| Type Ⅰ

Type Ⅰ |

| Type Ⅱ

Type Ⅱ |

| Type Ⅲ

Type Ⅲ |

| Type Ⅳ

Type Ⅳ |

| Type Ⅴ

Type Ⅴ |

| Normalized Throughput

Normalized Throughput |

| With Type Ⅰ

With Type Ⅰ |

| With Type Ⅱ

With Type Ⅱ |

| With Type Ⅲ

With Type Ⅲ |

| With Type Ⅳ

With Type Ⅳ |

| With Type Ⅴ

With Type Ⅴ |

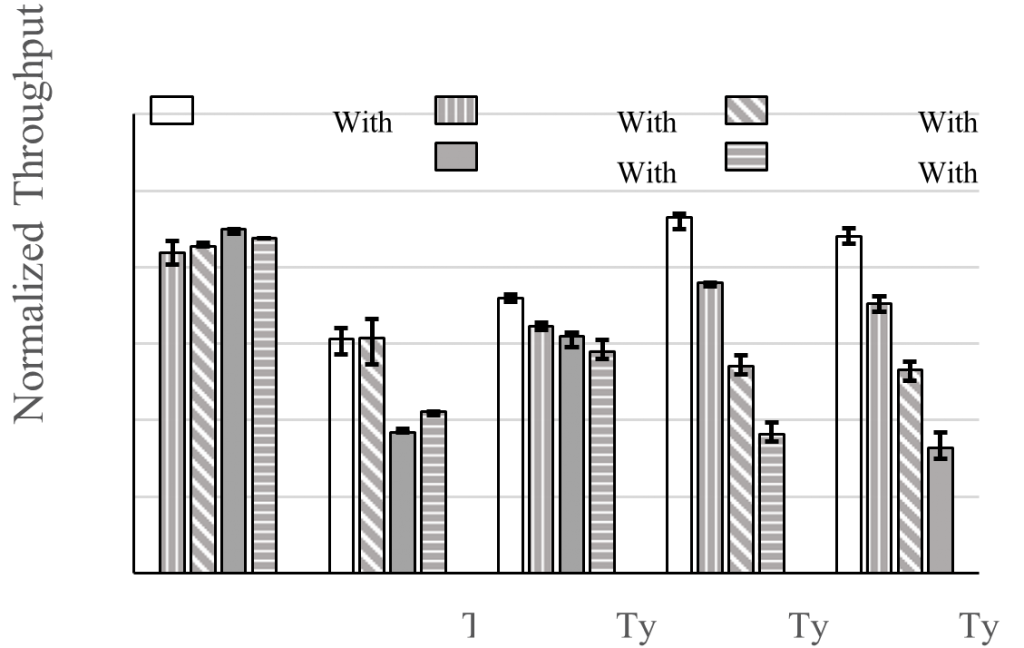

Fig. 3. Normalized throughput of each VNF in different pairs.

to identify the competitive resources on VNFs’ performance interference. Finally, we proceed to examine whether the characteristics of packet can impact the performance variation of VNF instances. For simplicity, we use the value when VNF runs separately as a baseline to compute variations in other contexts.

A. The performance interference between different VNF combinations

We first investigate the impact of VNF type on performance interference by measuring the performance of the applications listed in Table II when they run in pairs. The performance metric used here is “normalized throughput”: the ratio of a VNF’s throughput when it runs with another VNF to its throughput when it runs separately.
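The metric defined above is a simple ratio. A minimal sketch, using the Table I throughput values as inputs (the function name is ours):

```python
def normalized_throughput(colocated_mbps: float, separate_mbps: float) -> float:
    """Throughput when co-located divided by throughput when running alone."""
    if separate_mbps <= 0:
        raise ValueError("baseline throughput must be positive")
    return colocated_mbps / separate_mbps


# Table I values (Mbps): Pktstat and Snort, co-located vs. separate.
pktstat = normalized_throughput(103.99, 169.0)
snort = normalized_throughput(615.88, 955.0)
print(f"Pktstat: {pktstat:.2%}, Snort: {snort:.2%}")
# prints: Pktstat: 61.53%, Snort: 64.49%
```

A value below 100% indicates that the VNF loses throughput when it shares the server with a competitor.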

As depicted in Fig. 3, the performance interference of co-located VNFs is ubiquitous: all types of VNFs suffer performance degradation when they run in pairs, since the normalized throughput is always below 100%. We use “target VNF” to denote the VNF on the horizontal axis in Fig. 3, and call the VNF running alongside it the “competitor”. The normalized throughput varies with both the target VNF and the competitor. For example, when running with Type III, the normalized throughput of Type I is 85.41%, while that of Type II is 61.53%. Regardless of the target VNF, its normalized throughput is the lowest or close to the lowest when Type IV or Type V is the competitor. Type IV and Type V are therefore two of the most aggressive types. We then analyze the average normalized throughput of each type, as shown in Table III. Among all types, Type II is the most sensitive, with the lowest average normalized throughput of 49.69%.

TABLE III

AVERAGE NORMALIZED THROUGHPUT OF TARGET VNF.

| Type | I | II | III | IV | V |
| --- | --- | --- | --- | --- | --- |
| Normalized Throughput | 87.64% | 49.69% | 64.07% | 64.78% | 61.14% |

We draw the major observations of this section as follows:

- The performance interference among VNFs is ubiquitous and severe, causing performance degradation ranging from 12.36% to 50.3%. The degradation differs across co-located VNF pairs.

- Type IV and Type V are two of the most aggressive among the tested VNFs, because the normalized throughput of the target VNF is the lowest or close to the lowest when they are the competitors.

- Type II is the most sensitive among the tested VNFs, as its average normalized throughput is the lowest among all VNFs.
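The degradation range and the most sensitive type in these observations can be derived from the averages in Table III. A short sketch of that derivation:

```python
# Average normalized throughput per target type, in percent (from Table III).
avg_normalized = {"I": 87.64, "II": 49.69, "III": 64.07, "IV": 64.78, "V": 61.14}

# Degradation is the shortfall from the 100% stand-alone baseline.
degradation = {t: round(100.0 - v, 2) for t, v in avg_normalized.items()}

# The most sensitive type has the lowest average normalized throughput.
most_sensitive = min(avg_normalized, key=avg_normalized.get)

lowest, highest = min(degradation.values()), max(degradation.values())
print(f"degradation from {lowest}% to {highest}%, most sensitive: Type {most_sensitive}")
# prints: degradation from 12.36% to 50.31%, most sensitive: Type II
```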

B. Performance interference and resource competition

From the perspective of resources, performance interference may be caused by competition for network I/O bandwidth, CPU, cache, and memory. In this section, we first study the effect of network I/O bandwidth competition on performance interference. Then, for the other resources, we collect each VM’s resource utilization to identify the resources under competition.

Since all VNFs are network I/O applications, network I/O bandwidth must be one of the important resources they compete for [18]. Fig. 4 shows the performance degradation when VNFs run in different pairs with a network I/O bandwidth guarantee. All types of VNFs suffer from network I/O bandwidth competition, as the normalized throughput in Fig. 4 is higher than that in Fig. 3, where VNFs run without a network I/O bandwidth guarantee. The degradation caused by network I/O bandwidth competition accounts for half of the total degradation, meaning that this competition plays an important role in the performance interference between VNFs. Compared with Fig. 3, the trends of normalized throughput in Fig. 4 are the same except for Type III. Without a network I/O bandwidth guarantee, more CPU resources are consumed when VNFs receive packets from the host server. The CPU competition that Type III faces when it runs with Type IV or Type V is therefore more severe, resulting in much greater performance degradation of Type III. We also find that the level of performance interference caused by network I/O bandwidth competition differs across co-located VNF pairs.
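The share of degradation attributable to network I/O bandwidth competition can be estimated from the two measurements compared above. The sketch below assumes that the throughput recovered under a bandwidth guarantee is entirely due to removing I/O competition; the function name and the input values are hypothetical and are not read from Fig. 3 or Fig. 4.

```python
def io_share_of_degradation(without_guarantee: float, with_guarantee: float) -> float:
    """Fraction of total degradation removed by guaranteeing network I/O bandwidth.

    Both arguments are normalized throughputs in the range (0, 1].
    """
    total_loss = 1.0 - without_guarantee
    if total_loss <= 0:
        raise ValueError("no degradation to attribute")
    io_loss = with_guarantee - without_guarantee
    return io_loss / total_loss


# Hypothetical pair: 60% normalized throughput without a guarantee, 80% with one.
share = io_share_of_degradation(0.60, 0.80)
print(f"I/O competition share: {share:.0%}")
# prints: I/O competition share: 50%
```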

VII. CONCLUSION

In this paper, we classify VNFs into different types according to their packet operations and present a measurement study of performance interference among different types of co-located VNFs. To investigate the root cause of performance interference, we measure VNF performance with different combinations of co-located VNFs and with varying packet sizes and transmission rates. We find that the performance interference among VNFs is ubiquitous and severe, and that the interference level differs across groups of co-located VNFs. The reason is that co-located VNFs compete for resources, usually network I/O bandwidth, CPU, cache, and memory. We also observe that the performance interference becomes more severe as packet size and transmission rate increase. Accordingly, we give some suggestions on how to make VNF placement approaches more efficient.